“How might we humanize payments with a better customer experience taking advantage of artificial intelligence tech?” was the question I asked in my last article. Is it possible to create additional value, something improved, gentler, more appealing and more human for the consumer, by combining the possibilities of artificial intelligence, the needs of people and differentiation factors for businesses? How can data enable such new things around payments? Should this be done by payment companies, is it rather a task for commerce, or which other businesses should be involved?

Before diving deeper into the questions and sharing expert interviews I would like to start with some definitions: What is Artificial Intelligence?

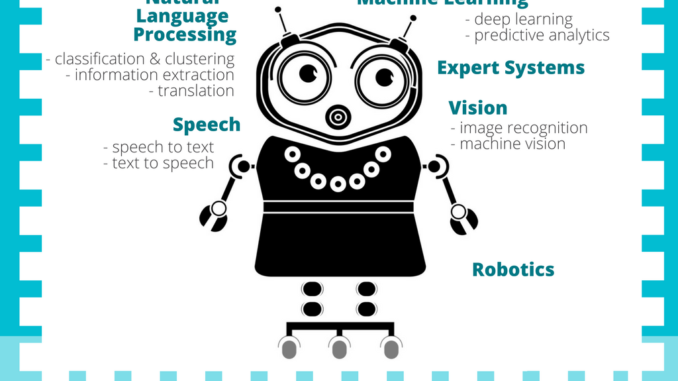

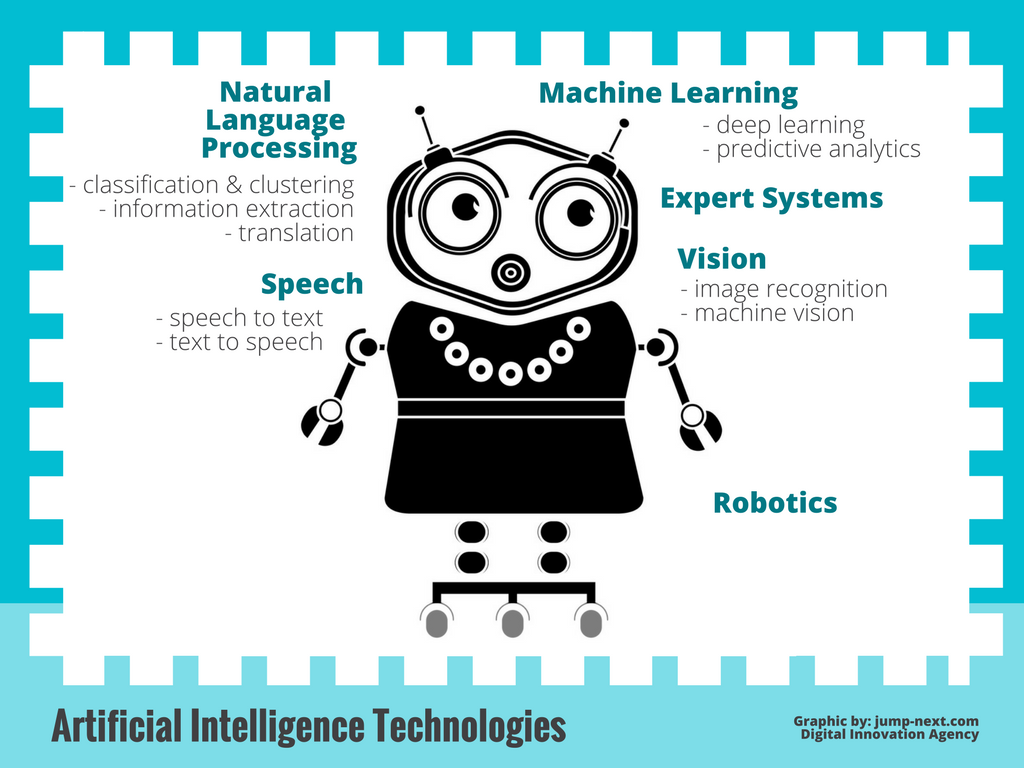

Artificial Intelligence Technologies – Overview

One definition of Artificial Intelligence: “development of computer systems able to perform tasks normally requiring human intelligence, such as visual perception, speech recognition, decision-making, and translation between languages.”

The following tech branches can be classified under the umbrella of AI. In this article series, AI is understood as the usage of one or several of these technologies:

If one wanted to associate these technologies with human capabilities, most of them are linked to skills located in a person's head (eyes, ears, mouth, brain). However, to figure out the best use cases for these technologies, it might be vital to not only get your head around them but also your heart :-)

Speech (Voice) + Natural Language Processing (NLP)

Computer systems can understand human speech: the error rate has come down from 26% to 4% in recent years. The success of Amazon Alexa is only one example of the growing number of successful services. In my last article I shared the example of “Finie”, which allows you to speak to your bank account as you would speak to a human. ING Direct is working in a similar direction and calls it “conversational banking”. Its CIO says: “the technology mostly bedded down, it’s now a matter of working out – with the business – how to actually use it… the opportunities are vast”.

It is also possible to shop on Alexa: products can be ordered at Amazon, and the payment is fully integrated in the background. This may be called “voice commerce” or “conversational commerce”.

Voice is also a biometric identifier. It could be used to authenticate a person by voice instead of a PIN or a password, or to charge a purchase to the right person when shopping in a multi-person household.

Definitions: Speech covers two things. 1. “Speech to text” captures words spoken by a human and transcribes them into text; you can observe this in your Alexa app, which shows the text of the words it captured every time you speak to it. 2. “Text to speech” speaks back to the human once the machine has created an answer. Natural language processing (NLP) works out what the words in the text mean. It can be defined as the ability of a machine to analyze, understand, and generate human speech. The goal of NLP is to make interactions between computers and humans feel exactly like interactions between humans.

Machine Learning

Speech and NLP make it possible to have a conversation. However, a relevant and meaningful conversation needs an even better understanding. This is where machine learning comes into play. Algorithms based on machine learning and deep learning can figure out precisely what context a spoken question relates to and what the expected or best answer might be.

Deep learning is used by Google in its voice and image recognition algorithms, by Netflix and Amazon to decide what you want to watch or buy next, and by researchers at MIT to predict the future.

Having said that, expert systems and analytical algorithms that produce answers to questions have been around for a while. But with the increasing volume and variety of data, in many cases it becomes more interesting to use machine learning and deep learning to understand situations and to predict future behavior or preferences.

A chatbot, for example, might use machine learning to understand why a customer calls the service center, how best to answer the questions, and which relevant documents to suggest automatically. For now, most existing chatbots are still based on expert rule systems that give a predefined answer once they have sorted a question into a certain category. It’s the traditional “if this then that” algorithm.
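To make the “if this then that” idea concrete, here is a minimal sketch of a rule-based chatbot. The categories, keywords and canned answers are invented for illustration and are not taken from any real bank's bot; real rule systems are far larger, but the principle is the same.

```python
import re

# Hypothetical rules: (category, trigger keywords, predefined answer).
RULES = [
    ("lost_card", {"lost", "stolen"},
     "I can block your card right away. Shall I do that?"),
    ("balance", {"balance"},
     "Your current balance is shown on the home screen of the app."),
    ("transfer", {"transfer", "send"},
     "To send money, open 'Payments' and choose 'New transfer'."),
]

FALLBACK = "Sorry, I did not understand that. Let me connect you to an advisor."


def categorize(question: str):
    """Return the first category whose keywords appear in the question."""
    words = set(re.findall(r"[a-z]+", question.lower()))
    for category, keywords, _reply in RULES:
        if words & keywords:  # "if this ..."
            return category
    return None


def answer(question: str) -> str:
    """Give the predefined answer for the matched category ("... then that")."""
    category = categorize(question)
    for name, _keywords, reply in RULES:
        if name == category:
            return reply
    return FALLBACK


print(answer("I think my card was stolen"))
print(answer("Good morning"))
```

The bot never gets better on its own: a question phrased with words outside the keyword lists always falls through to the fallback answer until someone edits the rules by hand.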

More sophisticated chatbots learn on their own from each case they handle and improve their answers over time (machine learning), just like a human apprentice would. For example, the new mobile-only bank in France by mobile operator Orange has partnered with IBM Watson to use AI in its customer service. Once the service chatbot has learned about the customer and her past spending behavior, it could make recommendations such as an amount to save each month. Apparently, the bot is even able to recognize emotions, such as when a customer is stressed. However, the bank has yet to launch and to learn.
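As a toy illustration of “learning with each case”, the sketch below counts which words appeared in questions that were previously assigned to a category and uses those counts to classify new questions. This is a deliberately simple word-counting approach chosen for readability; it is an assumption for illustration and not how IBM Watson or any production chatbot works. The class name, category names and sample questions are hypothetical.

```python
import re
from collections import Counter, defaultdict


class LearningClassifier:
    """Guesses a question's category from words seen in earlier cases."""

    def __init__(self):
        # category -> how often each word appeared in that category's cases
        self.word_counts = defaultdict(Counter)

    @staticmethod
    def _tokens(text: str):
        return re.findall(r"[a-z]+", text.lower())

    def learn(self, question: str, category: str) -> None:
        """Record one handled case, e.g. after an advisor confirmed the category."""
        self.word_counts[category].update(self._tokens(question))

    def predict(self, question: str):
        """Return the best-matching category, or None if no word is known yet."""
        tokens = self._tokens(question)
        best, best_score = None, 0
        for category, counts in self.word_counts.items():
            score = sum(counts[token] for token in tokens)
            if score > best_score:
                best, best_score = category, score
        return best


bot = LearningClassifier()
print(bot.predict("my card was stolen"))  # nothing learned yet

bot.learn("I lost my card", "lost_card")
bot.learn("what is my balance", "balance")
print(bot.predict("my card was stolen"))
```

Unlike a fixed rule table, nobody has to write keyword lists here: each confirmed case changes the counts, so the same question can receive a different, hopefully better, classification later on.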

Another “employer of Watson” is French bank Crédit Mutuel. Watson virtually assists 20,000 customer advisors in 5,000 branches in answering email inquiries from customers. It puts the most urgent inquiries first, suggests answers for the advisors and looks up related documents. However, Watson never answers on its own; it just helps the advisor save time. According to Crédit Mutuel, Watson gets it right in nearly 90% of the cases and allows advisors to find answers 60% faster than before.

Some shorter and more technical definitions:

Machine Learning is an application of artificial intelligence that automates analytical model building by using algorithms that iteratively learn from data without being explicitly programmed where to look (Wikipedia).

Deep Learning is a subfield of machine learning concerned with algorithms inspired by the structure and function of the brain called artificial neural networks (machinelearningmastery).

Predictive Analytics is used to make predictions about unknown future events. It uses many techniques from data mining, statistics, modeling and machine learning to analyze current data to make predictions about the future (predictiveanalyticstoday).

Vision

Image recognition has made very good progress in recent years: error rates are down from 28% to about 7%. This means a computer can tell whether a picture shows a dog or a cupcake that looks like a dog's face.

French startup Heuritech, for example, uses image recognition on Instagram pictures to identify fashion trends in real time. E-retailers can then feature emerging, buzzed-about products on their ecommerce sites.

Online travel club Voyage Privé analyzes the pictures a user has looked at on its website and applies “transfer learning”. For example, if a user looks at many hotels with a pool, the recommendation engine will suggest more destinations with a pool. It might also adapt the header picture of a destination to match the pictures the user prefers.

Amazon is using a different type of computer vision in its Amazon Go stores. The convenience supermarkets allow customers to check in, take products and leave the store without going through a checkout. Cameras observe what the customer took, put back, etc. and register the shopping basket automatically.

Definitions: Image Recognition is the ability of software to identify objects, places, people, writing and actions in images (techtarget). Machine Vision is the ability of a computer to see; it employs one or more video cameras, analog-to-digital conversion (ADC) and digital signal processing (DSP). The resulting data goes to a computer or robot controller (techtarget).

AI in payments – Hype or Reality?

Well, you might think that the headline of this article says “Artificial Intelligence in Payments” but most of the examples given in this text are from banking or other industries, not payments. And yes, you are right. I have been looking for examples of how AI improves the customer experience (CX) in existing payment offerings, that is, AI in payments beyond fraud and risk. In a quick search, those kinds of examples don’t pop up on the first page. Payment companies are talking about the potential and say that they are working on it. As Klarna’s CEO Sebastian Siemiatkowski says: “Going forward, a fintech company’s success will depend on the power of its AI, artificial intelligence, and UI, user interface. Those are the only things that matter [for a customer]. Which UI do I prefer to use for financial services, and who takes my data and creates the smartest things out of it? I prefer to use those who are the smartest.”

If you feel that you have a good example, please send me an email, LinkedIn or Twitter message.

Stay tuned, we will catch up on these questions…
